Multi Nodes

When I first tried kind, I was a bit surprised to see it creates by default a single Docker container to simulate a whole cluster. I was expecting it would use at least two containers to separate the Kubernetes control plane from a worker node, to mimic more closely a real Kubernetes environment. Anyway, Kind supports multi-node clusters. We can edit the kind configuration file to include more nodes.

Creating a cluster with multiple nodes¶

Starting from the kind.yaml of the last example, I tried creating a cluster with one

control plane and one worker node.

Now, there is something more worth considering. So far we ran the ingress controller

on the control-plane node. In a more realistic scenario, the ingress controller is

deployed on a worker node instead. With Kind, there is the complication that I want to

use extraPortMappings to map the ports from my host to the ports of a node. Therefore

I need a way to specify that the ingress controller should be provisioned on the worker

node with mapped ports. To achieve this, we can add extra labels to a worker node.

Let's say, ingress-node: "true" for the worker node that will run the ingress

controller and apps-node: "true" for the worker node with mounts that can run a

the Fortune Cookies app from the last example.

Let's delete the previous cluster and create a new one using the new configuration file.

Note how the third line now has three package emojis 📦 as the configuration file describes three nodes:

$ kind create cluster --config kind.yaml

Creating cluster "kind" ...

✓ Ensuring node image (kindest/node:v1.33.1) 🖼

✓ Preparing nodes 📦 📦 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

Set kubectl context to "kind-kind"

You can now use your cluster with:

kubectl cluster-info --context kind-kind

Have a question, bug, or feature request? Let us know! https://kind.sigs.k8s.io/#community 🙂

And true enough, kind spinned up three Docker containers:

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2081234ce071 kindest/node:v1.33.1 "/usr/local/bin/entr…" 5 minutes ago Up 5 minutes kind-worker2

cd56624175d5 kindest/node:v1.33.1 "/usr/local/bin/entr…" 5 minutes ago Up 5 minutes 0.0.0.0:80->80/tcp, 0.0.0.0:443->443/tcp kind-worker

be74048526e3 kindest/node:v1.33.1 "/usr/local/bin/entr…" 5 minutes ago Up 5 minutes 127.0.0.1:34545->6443/tcp kind-control-plane

Configuring Node Selectors¶

Previously I deployed the ingress controller downloading

https://kind.sigs.k8s.io/examples/ingress/deploy-ingress-nginx.yaml directly, now I

need to obtain a copy of this file and modify it to ensure the controller is deployed on

the worker node with the label ingress-node: "true". To achieve this, we need to

specify a nodeSelector rule in the ingress controller deployment.

I applied a nodeSelector to each section describing containers, to deploy the ingress

controller on a node with the label ingress-node: "true".

The deployment should succeed. You can monitor it like described in the first exercise,

using kubectl.

Node selector on the cookies app¶

Let's redeploy the fortune cookies app using the modified cookies.yaml that includes

the node selector setting:

And finally let's deploy the ingress rules like in the previous example:

kubectl create namespace common-ingress

cd ssl

kubectl create secret tls neoteroi-xyz-tls \

--cert=neoteroi-xyz-tls.crt \

--key=neoteroi-xyz-tls.key \

-n common-ingress

cd ../

kubectl apply -n common-ingress -f common-ingress.yaml

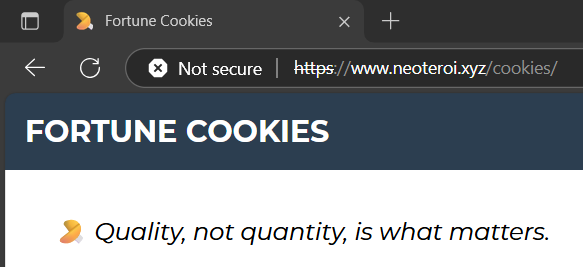

Hurray!¶

It works! https://www.neoteroi.xyz/cookies/

However, to be absolutely certain that the process is simulated on a worker node, let's check entering the correct container.

In the output, you can see:

- the node dedicated to the

control-plane - the worker node dedicated to the ingress, which is the one with ports mapped from the

host, named

kind-worker. - the worker node dedicated to running apps (with

apps-node: "true"label), namedkind-worker2, recognizable because it doesn't have mapped ports.

We can verify that the fortune cookies app is running in kind-worker2 by entering

the container and checking the processes:

And true enough, you can see that the uvicorn process is running in the kind-worker2

container, visible on lines 15-16 of the output below, matching the CMD parameter of

the demo apps Dockerfile.:

Following a restart…¶

Following a restart of the cluster, the system took several minutes to become responsive. This issue happened only once in several system restarts.

Initially it was giving a Gateway Time Out error, which is a common issue when the

ingress controller is not ready to handle requests.

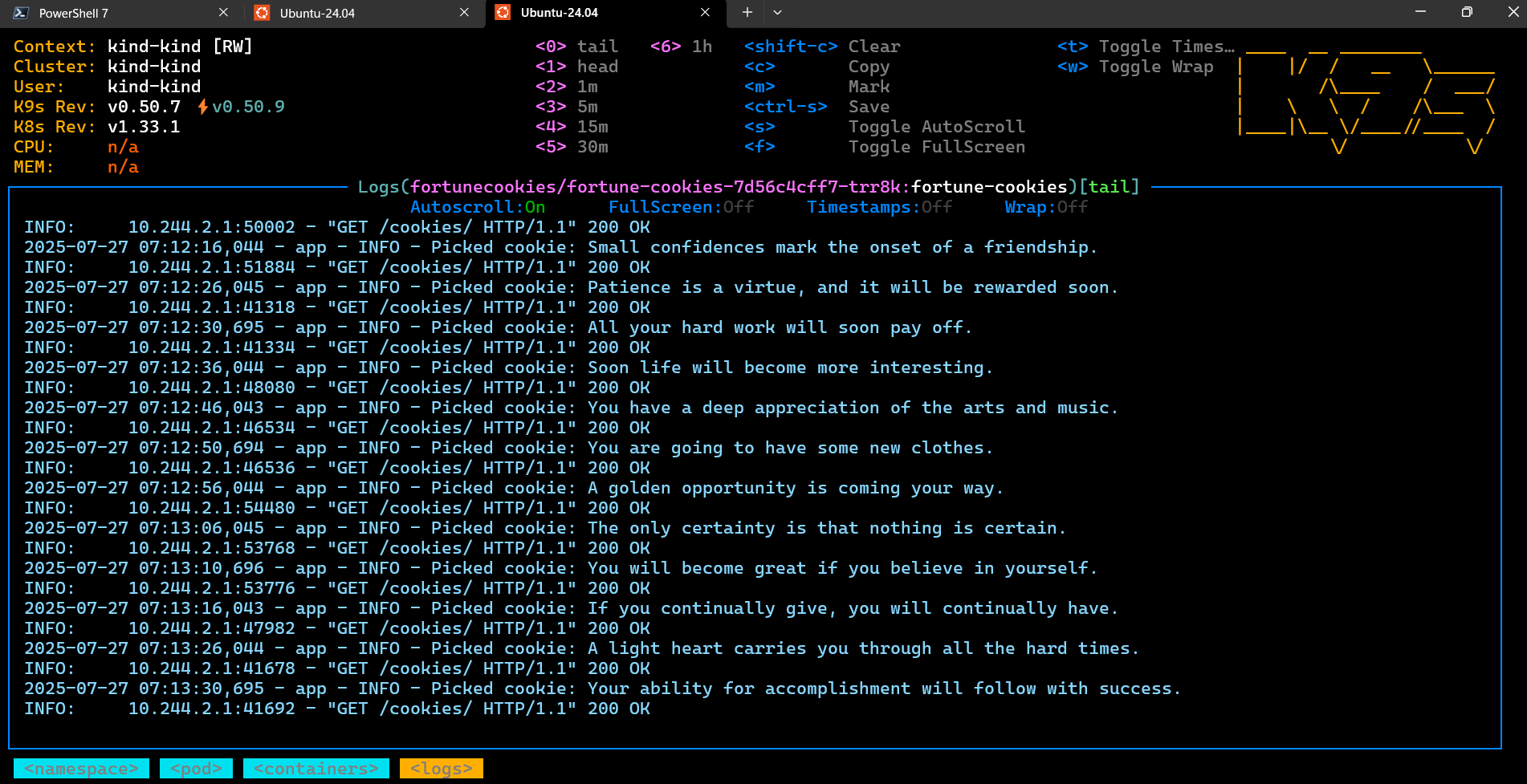

I verified the fortune cookies app was working and it started quickly, using the commands:

kubectl get pods -n fortunecookies

kubectl describe pod <pod-name> -n fortunecookies

kubectl logs -p <pod-name> -n fortunecookies

While I was investigating the status of the ingress controller, the system started working.

The logs of the ingress-nginx-controller showed that the error was caused by DNS

resolution issues, indicating that the NGINX ingress controller was having trouble

resolving the DNS name fortune-cookies.fortunecookies.svc.cluster.local using the

cluster DNS service at 10.96.0.10:53.

2025/07/27 07:05:13 [error] 47#47: *5409 lua udp socket read timed out, context: ngx.timer

2025/07/27 07:05:13 [error] 47#47: *5409 recv() failed (111: Connection refused), context: ngx.timer

2025/07/27 07:05:13 [error] 47#47: *5409 [lua] dns.lua:152: dns_lookup(): failed to query the DNS server for fortune-cookies.fortunecookies.svc.cluster.local:

failed to receive reply from UDP server 10.96.0.10:53: timeout

According to GitHub Copilot, this is a common issue with multi-node clusters in kind, where the DNS service may not be fully initialized or reachable at the time the ingress controller starts.

This issue, however, does not occur at every restart. In some cases, the system becomes available more quickly, likely depending on the order in which pods are spun up.

Chat with GitHub Copilot .

RP: Do you have any idea on why the cluster and the NGINX ingress took a very long time to start, following a system restart in my Multi-nodes Kind setup described in my documentation?

GitHub Copilot: Yes, this is a common issue with multi-node kind clusters, especially after a system restart. Here are the most likely causes:

-

Docker Network Initialization Delay After a system restart, Docker needs to re-create its internal networks and start all containers. Kind clusters rely on Docker networking, and sometimes the containers (nodes) start before the network is fully ready, causing delays in pod scheduling and communication.

-

Pod Scheduling and Node Readiness Kubernetes waits for all nodes to become "Ready" before scheduling critical pods like the ingress controller. If any node is slow to start (due to Docker or resource contention), the ingress controller and other pods may be stuck in a pending or initializing state.

-

Volume Mounts and HostPath Issues If you use

hostPathvolumes (as in your setup), and the host directories are not available or are slow to mount after a reboot, pods depending on those mounts may fail to start or restart repeatedly. -

Image Pull/Container Startup Delays If images are not cached locally or if there are issues with the container runtime, pulling images or starting containers can take longer after a restart.

-

Ingress Controller Probes The NGINX ingress controller uses liveness/readiness probes. If the controller starts before the network is fully functional, these probes can fail, causing Kubernetes to restart the pod or mark it as "Unhealthy" until the network stabilizes.

-

Resource Contention On local machines, especially with limited CPU/RAM, starting multiple Docker containers and all cluster components at once can overwhelm the system, leading to slow startups.

What you can do:

- Wait a few minutes after system boot before starting the cluster, to let Docker and

its networks fully initialize.

- Check docker ps and docker network ls to ensure all containers and networks are

up.

- Use kubectl get nodes and kubectl describe node <node> to check node readiness and

events.

- Review pod events with kubectl describe pod <pod> for clues (e.g., probe failures,

mount errors).

- Consider using kind delete cluster and kind create cluster --config kind.yaml

after a reboot if issues persist.

Summary: This is mostly due to Docker and Kubernetes components racing to initialize after a system restart, combined with the complexity of multi-node setups and hostPath mounts. It is a known limitation of running multi-node clusters with kind on local machines.

Summary¶

In this exercise, we created a multi-node cluster with kind and deployed the Fortune Cookies app on a dedicated worker node. We also configured the ingress controller to run on a separate worker node, allowing us to better simulate a realistic Kubernetes environment.

Although kind is primarily a tool for local development, the use of labels and node selectors to control pod placement is a fundamental concept in Kubernetes, and is also important to run production workloads.

The cluster takes much longer to start after a system restart, which is a common issue with multi-node kind clusters. This seems to be due to the time it takes for Docker to initialize networks and containers, as well as the Kubernetes scheduler waiting for all nodes to become ready.

We also saw how to inspect processes running in a container.